Web Design: Essential Website Development Tips for Beginners

Building a website is a crucial step for any business or personal project. Web development is complex, with the right

For many businesses, ranking well on Google search is essential for their SEO strategy. A good presence on Google can attract more organic traffic and enhance visibility. Keyword research and tracking tools have become essential for SEO experts. However, recent actions by Google to block web scrapers have surprised the industry.

The SEO world ran into serious issues when several keyword tracking tools stopped working. This affected many well-known SEO tools, such as Semrush, which were unable to provide accurate and up-to-date keyword rankings. The problem highlighted how much rank checker tools rely on scraping Google search results. It also revealed how fragile these methods are whenever a search engine like Google changes how it serves results.

Google has changed how its search engine works. You now need to have JavaScript enabled in your browser to search on Google. This might feel like a small update, but it can greatly help in detecting bots. Many bots do not execute JavaScript at all, or execute it only partially, which makes it easier for Google to identify and block them.

This change is meant to improve the user experience. It helps lessen the load on Google’s servers. Additionally, it stops harmful bots from disrupting search results and Google Ads. However, this also makes things harder for several ranking tools. These tools used to gather data from Google’s search engine results pages (SERPs).

Google’s requirement that JavaScript be enabled in the browser helps it tell real users on regular browsers apart from automated scripts that send requests.
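To make the idea concrete, here is a minimal sketch of a generic JavaScript challenge, written in Python for readability. It is not Google's actual mechanism, which is not public. The names `SERVER_KEY`, `issue_challenge`, `solve_in_browser`, and `verify` are hypothetical, and the "work" the browser does (hashing a nonce) is a deliberately trivial stand-in for whatever a real page script would compute.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# Hypothetical server-side secret; a real deployment would load it from secure config.
SERVER_KEY = secrets.token_bytes(32)


def issue_challenge() -> Tuple[str, str]:
    """Create a random nonce and sign it so the server can recognise it later."""
    nonce = secrets.token_hex(16)
    signature = hmac.new(SERVER_KEY, nonce.encode(), hashlib.sha256).hexdigest()
    return nonce, signature


def solve_in_browser(nonce: str) -> str:
    """Stand-in for the script a real browser would run on the page."""
    return hashlib.sha256(nonce.encode()).hexdigest()


def verify(nonce: str, signature: str, answer: Optional[str]) -> bool:
    """Accept the request only if the nonce is ours and the answer is correct."""
    expected_signature = hmac.new(
        SERVER_KEY, nonce.encode(), hashlib.sha256
    ).hexdigest()
    if not hmac.compare_digest(signature, expected_signature):
        return False  # nonce was not issued by this server
    if answer is None:
        return False  # client never ran the script
    expected_answer = hashlib.sha256(nonce.encode()).hexdigest()
    return hmac.compare_digest(answer, expected_answer)


if __name__ == "__main__":
    nonce, signature = issue_challenge()
    # A browser that runs the script passes the check.
    print(verify(nonce, signature, solve_in_browser(nonce)))
    # A plain HTTP client that skips the script does not.
    print(verify(nonce, signature, None))
```

A client that only downloads the HTML never produces the answer, so the server can treat it differently from a browser that ran the script. Real systems layer many more signals on top of this.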

The integration of JavaScript-based traffic authentication changed the SEO industry fast. Marketing tools that once collected Google search results for keyword rankings ran into big problems. These problems meant that businesses and SEO experts could not get the important data needed for their marketing strategies.

Keyword rank tracking is very important for understanding how SEO is performing. Without this data, it is hard to know whether SEO campaigns are effective, to monitor keyword progress, or to understand search engine rankings. The sudden changes from Google showed how crucial it is to have reliable and adaptable SEO tools.
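As a rough illustration of what "monitoring keyword progress" means in practice, the sketch below compares two ranking snapshots and reports how far each keyword moved. The function name `rank_changes` and the sample keywords and positions are made up for the example; they do not come from any particular tool.

```python
from typing import Dict, Optional


def rank_changes(
    previous: Dict[str, int], current: Dict[str, int]
) -> Dict[str, Optional[int]]:
    """Return positions gained per keyword (positive means the keyword moved up).

    Keywords missing from either snapshot map to None, because no
    comparison is possible for them.
    """
    changes: Dict[str, Optional[int]] = {}
    for keyword in sorted(set(previous) | set(current)):
        if keyword in previous and keyword in current:
            # Position 1 is best, so a smaller number today is an improvement.
            changes[keyword] = previous[keyword] - current[keyword]
        else:
            changes[keyword] = None
    return changes


if __name__ == "__main__":
    # Hypothetical snapshots: keyword -> position in the results.
    last_week = {"seo tools": 8, "rank tracker": 15, "keyword research": 4}
    this_week = {"seo tools": 5, "rank tracker": 19, "serp api": 12}
    for keyword, delta in rank_changes(last_week, this_week).items():
        print(keyword, delta)
```

When a tracker cannot collect fresh positions, the "current" snapshot is simply missing, and every comparison like this one stops being possible.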

Developers of keyword tracking tools are trying to find new ways to collect Google SERP data because of the new JavaScript requirement. Here are some ideas they could consider:

Google’s recent changes show that search engines now care more about user experience and want to stop unfair SEO tricks. This means that SEO plans should focus on real user engagement, keyword opportunities, and useful content. They should not aim only for good keyword rankings.

The recent events have made it difficult for keyword tracking tools. Still, this situation brings a chance for new ideas. By adapting to Google’s improved bot detection, using different data sources, and possibly teaming up with search engines, these tools can remain useful in the SEO toolkit.

Keyword rank checkers can find clever ways to bypass Google’s new protections. This can help them avoid detection as bots. They might use methods such as:

Tool developers can build better scraping tools that can avoid Google’s defenses. These tools can still be fair and stick to the rules.

Many popular keyword tracking tools like Ahrefs, Moz or SE Ranking are managing the challenges from Google’s updates. They are using various data sources. They are also improving their scraping methods and paying attention to user experience. Because of these efforts, these tools remain essential for SEO experts.

Ahrefs is a popular SEO tool that gives good data and many great features. The platform is always improving its algorithms to keep its SEO and marketing tool precise. Ahrefs’ rank tracker gathers data from many places. This includes a big list of backlinks and search engine results pages. It helps to show how well keywords are doing.

Ahrefs offers more than just rank tracking. Its keyword research tools are very detailed. These tools help users discover valuable keywords that face less competition. They consider several factors, such as search intent, keyword difficulty, and traffic potential.

Semrush is a popular marketing platform. It provides several tools for SEO. These tools include keyword research, competitor analysis, and rank tracking. Semrush is also updating how it collects data to match changes in search.

The platform is well known for having a big database of keywords and ranking data. It collects this information from different SERP features and collaborations. Semrush combines data from its various tools, which lets you see keyword performance in one place, including organic search volume, paid search data, and insights on content marketing.

Moz is a well-known brand that provides a comprehensive SEO tool to monitor your competitors’ keywords, research new keywords, and find backlink opportunities. Recently, due to Google’s actions against scraping, Moz has been focusing more on user data.

Moz Pro is a set of tools for SEO. With Moz Pro, you can track keyword rankings on various search engines. You can also check your website’s SEO health.

SERPWatcher is a keyword rank tracker developed by Mangools. It stands out because it tracks ranks in real time and is easy to use. Even when Google makes changes, SERPWatcher provides daily rank tracking updates. This feature helps users monitor how their keywords are doing and notice changes immediately.

The tool displays information in easy-to-read charts and graphs. This allows users to see keyword trends and track their progress easily.

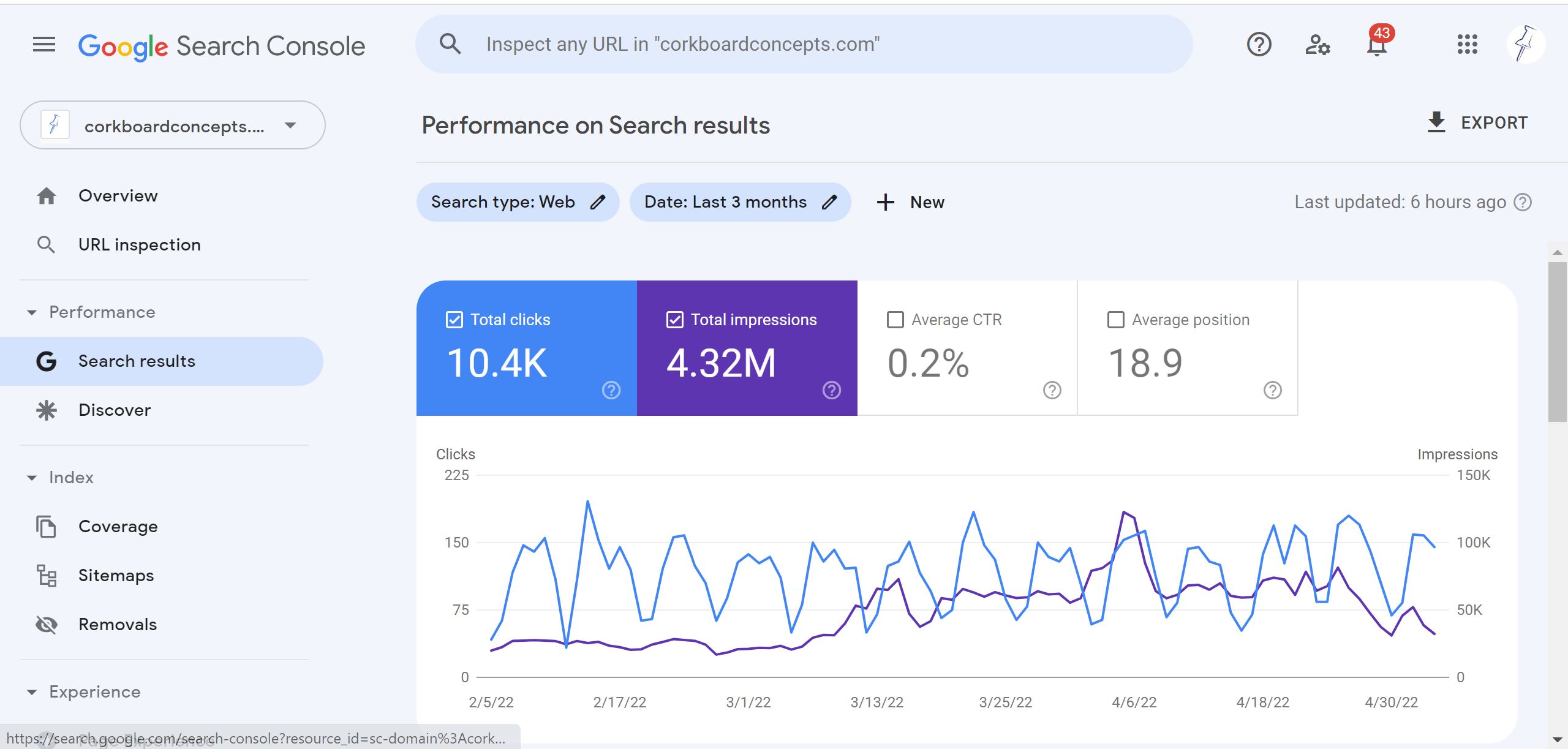

Google Search Console, formerly known as Google Webmaster Tools, is a free service provided by Google (along with Google Analytics) that helps website owners monitor their SEO keywords in Google search results. It offers valuable insights into how Google views your site, identifies any issues that may be impacting your site’s performance in search results, and provides data on how users are interacting with your site.

One of the key features of Google Search Console is the ability to see which keywords are driving traffic to your site and how your pages are ranking for those keywords. This information can help you optimize your content to better align with what users are searching for.
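As a sketch of how that data might be used, the example below groups performance rows by query and computes total clicks, click-through rate, and an impression-weighted average position. Clicks, impressions, and position are metrics Search Console reports. The row layout, the function name `summarize_by_query`, and the sample values are assumptions made for illustration, not an official export format.

```python
from collections import defaultdict
from typing import Any, Dict, List


def summarize_by_query(rows: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Aggregate per-page rows into one summary per search query."""
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"clicks": 0, "impressions": 0, "weighted_position": 0.0}
    )
    for row in rows:
        bucket = totals[row["query"]]
        bucket["clicks"] += row["clicks"]
        bucket["impressions"] += row["impressions"]
        # Weight each position by impressions so busy pages count for more.
        bucket["weighted_position"] += row["position"] * row["impressions"]

    summary: Dict[str, Dict[str, Any]] = {}
    for query, bucket in totals.items():
        impressions = bucket["impressions"]
        summary[query] = {
            "clicks": bucket["clicks"],
            "impressions": impressions,
            "ctr": bucket["clicks"] / impressions if impressions else 0.0,
            "avg_position": (
                bucket["weighted_position"] / impressions if impressions else None
            ),
        }
    return summary


if __name__ == "__main__":
    # Hypothetical rows, shaped like a performance report broken down by page.
    sample_rows = [
        {"query": "rank tracker", "page": "/tools", "clicks": 30,
         "impressions": 600, "position": 4.0},
        {"query": "rank tracker", "page": "/blog/rank-tracking", "clicks": 10,
         "impressions": 400, "position": 9.0},
        {"query": "seo audit", "page": "/audit", "clicks": 5,
         "impressions": 50, "position": 12.0},
    ]
    for query, stats in summarize_by_query(sample_rows).items():
        print(query, stats)
```

Queries with many impressions but a low click-through rate are often good candidates for reworking titles and content to match what users are searching for.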

In conclusion, Google’s steps against large scraping tools have changed how SEO and keyword tracking function. Now, it’s crucial to adjust and think of new ideas.

As we move ahead, it’s key for these tools to develop and follow Google’s guidelines. This will help them remain relevant and do well in the fast-changing sector of digital marketing. Stay updated, stay adaptable, and keep winning.
